(CNN Business) – Google wants to make it easier to find things that are difficult to describe with a few words or a picture.

On Thursday, Google launched a new search option that lets you combine text and images in a single query. With this feature, you can search for a shirt similar to the one in a photo but type that you want it with “polka dots,” or take a picture of your sofa and type “chair” to find a chair that looks like it.

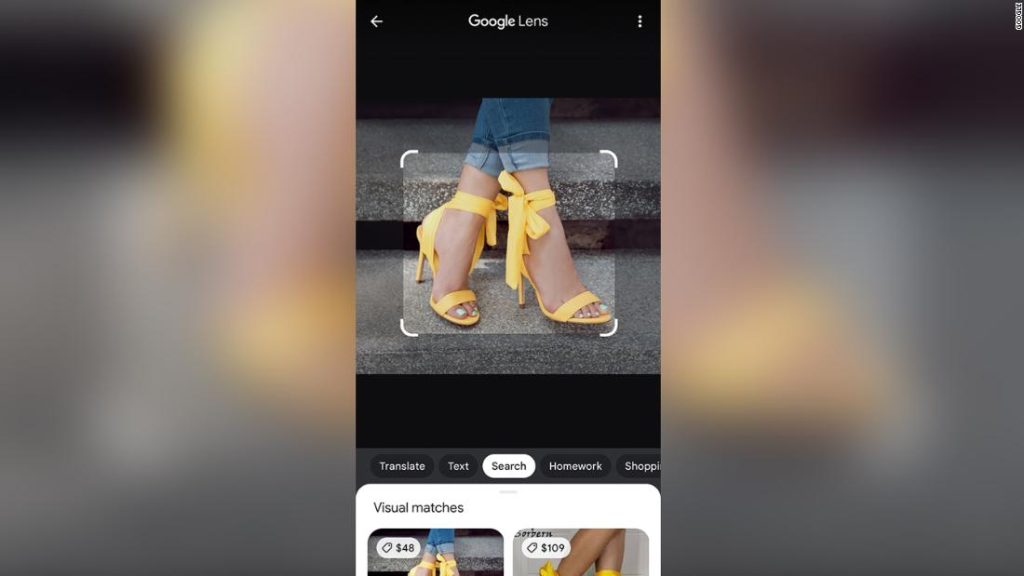

The feature, which the company calls “multisearch” and previewed in September, is now available to US users in the Google Lens portion of the Google mobile app. Liz Reid, vice president of Google Search, told CNN Business that the feature will be considered a beta at first. It is expected to be used initially for shopping-related searches, although it is not limited to such queries.

“This will be just the beginning,” Reid said.

Multisearch is Google’s latest effort to make searching more flexible and less limited to on-screen words. Google has long offered an image search engine. There is also Google Lens, which was first shown in 2017 and can recognize objects in an image or instantly translate text seen through the phone’s camera lens. Another effort, in 2020, gave users the option to hum a song to search for it.

How does the new multisearch mode work?

To find multisearch in the Google mobile app, tap the camera icon on the right side of the search bar, which opens Google Lens. You can take a picture or upload one, then tap the small bar containing a plus sign and the phrase “Add to your search.” This lets you type words to better explain what you want.

Multisearch essentially uses AI in several ways. Computer vision infers what is in the image, while natural language processing determines the meaning of the words you type. These results are then combined to train an overarching system, Reid said.
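As a rough illustration of combining an image signal with a text signal, here is a minimal Python sketch. It is not Google's system: the catalog, the tag-overlap scoring, the `text_weight` parameter, and the helper names `describe_image`, `parse_text`, and `multisearch` are all hypothetical stand-ins, and no model is trained here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    name: str
    tags: frozenset


def describe_image(image_labels):
    """Hypothetical stand-in for computer vision: the labels 'seen' in a photo."""
    return frozenset(label.lower() for label in image_labels)


def parse_text(query):
    """Hypothetical stand-in for language processing: lowercase keywords."""
    return frozenset(query.lower().split())


def multisearch(image_labels, query, catalog, text_weight=2.0):
    """Rank catalog items by overlap with both the image and the typed words.

    Typed words count more (text_weight) because they refine what the
    photo alone cannot express. This weighting is an assumption.
    """
    visual = describe_image(image_labels)
    words = parse_text(query)

    def score(item):
        return len(item.tags & visual) + text_weight * len(item.tags & words)

    ranked = sorted(catalog, key=score, reverse=True)
    return [item.name for item in ranked if score(item) > 0]


# Toy catalog, invented for this example.
CATALOG = [
    Item("green velvet sofa", frozenset({"green", "velvet", "sofa"})),
    Item("green velvet chair", frozenset({"green", "velvet", "chair"})),
    Item("red wooden chair", frozenset({"red", "wooden", "chair"})),
]

# A photo of a green velvet sofa plus the typed word "chair".
results = multisearch(["green", "velvet", "sofa"], "chair", CATALOG)
print(results[0])
```

In this toy setup, the photo of a sofa plus the word “chair” ranks the chair that shares the sofa's look first, which mirrors the sofa-and-chair example above.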

When Google introduced this type of search in September, the company explained that it would use a powerful machine learning tool called MUM (which stands for “multitask unified model”), which it unveiled last May. Reid said in an interview last week that this won’t be the case initially, but that the company may use MUM in the coming months, which she said she believes will help improve search quality.

When asked whether Google will eventually let people use search in more varied ways, such as combining music and lyrics in a query to find new genres of music, Reid said the company isn’t working on that specifically, but that it is interested in combining multiple inputs in the future.

